Universal Code of Conduct/2021 consultations/Enforcement/Wikimedia Commons community/fr

Wikimedia Commons (commonly referred to as Commons) is a free media repository that also acts as the common media repository for all Wikimedia projects. It was launched on 7 September 2004. Wikimedia Commons has a multilingual and highly active community. It has more than 42,000 active users, 210 administrators, 7 bureaucrats, 5 checkusers, and 4 oversighters. As of March 2021, it has more than 70 million content files and more than 68 million content pages.

Commons is a unique Wikimedia project in many respects. Unlike the Wikipedias, where collaborative work is quite common, Commons is mostly individualistic when it comes to content development. This is mainly because most people take and upload their images and videos on their own, which in most cases requires little or no interaction with others. However, collaboration does occur in photographic competitions, image editing and enhancement, illustration requests and development, category management, etc.

As a multilingual community, it also has 50+ non-English village pumps to support users of various languages, which is unusual among Wikimedia projects. Many experienced Commons contributors consider other language projects their home projects, and many of them hold advanced user rights in both. This helps the Commons community stay informed and aware of usable ideas in many aspects of project governance.

Behavioral policies

As of March 2021, Wikimedia Commons has the following ratified behavioral policies and guidelines. The existing policies seem to work fine for the project. However, the consultation found that the project lacks community-ratified policies and guidelines in several areas.

| Behavioral policies | Behavioral guidelines |
|---|---|

In addition to official policies and guidelines, there are some essays written by volunteer contributors that have no official status and that contributors are not obligated to observe. Such essays cover staying mellow, not staying mellow, no personal attacks, civility, harassment, ignore all rules, canvassing, etc.

Facilitation process

The community was approached in several steps. Before the consultation, several active and experienced community members were contacted and informed about the upcoming UCoC enforcement consultation. The consultation started on 18 January 2021 and took place on a dedicated page on Wikimedia Commons. Once it began, a message was posted on the main Village Pump to inform all community members. Similar announcements were sent to the Commons mailing list, Telegram group, and IRC channels. A few experienced users and active contributors to Administrators’ Noticeboard/User problems (ANU) were notified on their talk pages.

After a few days of initial insights, community members were asked a few basic enforcement questions about how the UCoC could be enforced in different challenging situations. A few days later, the advanced user rights holders (i.e., administrators, bureaucrats, checkusers, etc.) were contacted on their talk pages via the message delivery tool for their expert opinion. As the on-wiki discussion thrived, a Google Form survey was sent out to allow users to provide their opinion anonymously.

The survey link was posted on the village pumps, on administrators’ talk pages, and in the header of the consultation page. Being a multicultural project, Commons has language-specific village pumps. Fifty local-language village pumps were also notified about the on-wiki UCoC consultation and the survey. The consultation officially ended on 28 February 2021.

The overall response rate was good. The on-wiki consultation was engaging and remained active until its last day. Several community members did not support using Google Forms as a survey platform; however, the survey still yielded valuable insights from fellow community members.

To get feedback from users who report behavioral issues and from users who mediate or resolve user problems, several users active on Administrators’ Noticeboard/User problems (ANU) were contacted via talk page, chat, and email and asked to share their insights on the consultation page. Almost all responded positively and provided feedback on the consultation page, by email reply, and/or in one-to-one discussion.

Feedback summary

Feedback on the policy text

I think it would be beneficial if explicitly would have been stated what diversity stands for, and how members of the community are meant to deal with for instance potential conflicts between say LHBQTI and religious beliefs.

We found that users do not consider the UCoC policy text very useful in its current state. The text is vague and has many ambiguities, so the writing needs improvement. Participants emphasised clarifying and elaborating the meaning of keywords such as ‘vulnerable’, ‘accessible’, and ‘diversity’ to avoid conflicts between groups and cultures. There is also concern that the code’s vagueness can make it hard to determine whether some conduct is sanctionable.

Peer support and support for targets of harassment

Opinion is mixed on the usefulness of a peer support group. Some community members think it is not helpful because there are issues that cannot be reported or resolved publicly. Others think peer support groups can be helpful, and there is a proposal to create an ‘Empathy OTRS’ channel to support those in need. We also see suggestions for a safeguarding team that can provide support to victims. Such a team could consist of on-wiki volunteers, affiliate members, and staff who are skilled and trained to guide victims and avoid harm. It is worth mentioning that those who provide support through peer support groups or safeguarding teams are themselves prone to harassment, so they need proper training to protect themselves. We also see suggestions to hire professionals who can provide expert support in conflict management.

Enforcement body

Most contributors prefer to see administrators as the primary enforcement body. This is also the current practice. We also see considerable support for a dedicated local body to resolve issues that local administrators cannot. We found that, although some form of committee to arbitrate behavioral issues is needed, concerns such as long processing times, outside influence on committee members, and the members' skills and expertise are the main obstacles to a committee's success.

Reporting harassment

Commons has particular issues with the identification of users, privacy concerns, and their interaction with copyright legislation which will need particular care and concern to best handle.

UCoC enforcement starts with reporting. Currently, Commons uses Administrators’ Noticeboard/User problems (ANU) to report all user-related issues. Not all of them are within the scope of the UCoC, but conduct issues are also reported there. Many minor conduct issues are reported and resolved in individual talk page discussions. The community prefers an easy reporting system.

To make reporting more accessible, a new MediaWiki design has been suggested. The ‘Report harassment’ button in Tagalog Wiktionary was mentioned as a reference, and a ‘Report this user’ button for reporting users has also been suggested. There are suggestions for a separate wiki (SafetyWiki) for reporting harassment that occurred outside Wikimedia projects but is related to the target’s wiki contributions or role. T&S can provide staff time to check if the guidance is sufficient. To prevent misuse of the reporting system, providing diffs could be made compulsory. Furthermore, a feature has been suggested for tagging specific diffs as harassment edits so that they can be categorized for further investigation.

The consultation finds that, despite being a highly active and mature community, Commons falls short when it comes to reporting harassment. It has only one public page for reporting harassment and no private reporting channel. Reporters are commonly subjected to further harassment when they go through public channels. We see several suggestions to create a confidential reporting system.

Harassment outside Wikimedia projects

The consultation finds that harassment outside Wikimedia projects is a major challenge for UCoC enforcement. Verifying allegations is difficult because the evidence might not be public, or accessible enough to act on or mediate.

To resolve incidents that occur outside Wikimedia projects, it has been suggested to establish a legal presence through partner NGOs that can handle legal proceedings. A specific on-wiki policy provision is recommended to allow sanctions for off-wiki harassment.

False allegations

To prevent false allegations, strict measures such as community sanctions after multiple false allegations have been proposed. Some users see the UCoC as a potential weapon for harassing someone through false allegations. Providing evidence (through diffs in the case of on-wiki evidence) should be mandatory for any kind of report. Any provocation of the accused user should also be considered, as there are incidents of circular harassment, in which someone harasses back after being harassed first.

Transparency of reporting and actions by WMF

Transparency is paramount, all users involved should be able to state their cases and defend themselves (preferably publicly) against all allegations. The system cannot succeed if it's not open, Wikimedia Commons should be as open as possible so all actions can receive fair scrutiny.

The community has concerns about the transparency of WMF’s actions, especially global bans. The community is not aware of the reasons for the bans. It is also unclear to the community whether the actions were justified or whether the banned users were able to defend themselves. It has been suggested that the community where the accused user is active be consulted, and its opinion considered, before a global ban is placed for unacceptable conduct elsewhere.

The majority of participants have very little confidence in WMF's intention and ability to provide the expected safety and protection to volunteers facing harassment. The community is also concerned about the lack of transparency behind WMF's actions and fears the UCoC will be weaponized to keep more users out of Wikimedia space. The community would like to see WMF empower the community and increase the transparency of its actions. Due to the overall lack of trust in WMF, many participants feel that a new code of conduct may not provide the protection they seek from WMF, especially in the case of off-wiki harassment.

Survey

The survey received 75 responses. It had a combination of single and multiple-choice questions, short questions, and elaborative questions. Most participants provided responses to most questions.


Participants by gender identities


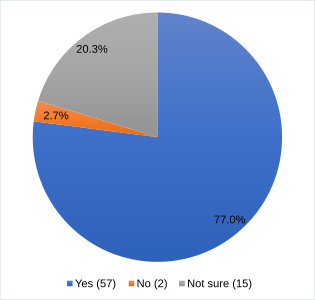

Most participants are familiar with existing behavioral policies, but a sizeable portion are not sure


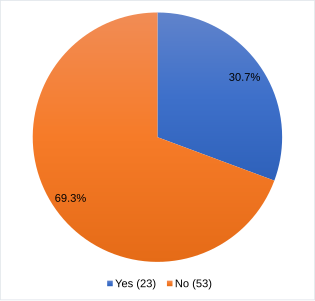

Most participants prefer a private reporting system to avoid further harassment


Almost half of the participants think it is not easy to report harassment


More than half of the participants support UCoC and a sizable portion is neutral to it


More than one-fourth of the participants faced off-wiki harassment

Conclusion

Wikimedia Commons is an exceptional Wikimedia project whose strong and functional existence is vital to the sustainability of all Wikimedia sites. It has a few basic behavioral policies and guidelines, but it lacks many others, which leads many users to act on or apply the policies they know and are familiar with from their home projects. Because Commons is multilingual with a culturally diverse user base, this practice does not help produce uniform decisions and outcomes. Despite the large amount of content and the challenges that come with it, its governance lacks active human resources. As a result, active users are overwhelmed by their workload and are also likely to face bad behavior as a result of their good-faith actions.

We observe major concerns regarding the UCoC policy text, whose lack of clarity can ultimately be a challenge for proper site-wide enforcement. Commons has an active on-wiki public reporting system and enforcement mechanism. However, many consultation participants pointed to serious limitations of this public reporting system and favor a private reporting and arbitration process that can help victims get the support they need without being harassed or attacked further. Concerns about a bureaucratic, time-consuming, and flawed arbitration process nevertheless remain.

Because the project has a substantial number of lone contributors, a friendly and active peer support group and/or safeguarding team is deemed necessary for both the protection and the guidance of the vulnerable. Language and cultural diversity bring both opportunities and challenges to the project. Commons is gifted with diverse and experienced community members hailing from other Wikimedia projects who contribute valuable experience, ideas, skills, up-to-date information, and historical references from different cultures and project types. At the same time, this diversity also brings local problems and disputes to Commons that burden the project and consume valuable volunteer time.

Additionally, we see sizable frustration in the community about WMF not providing adequate support to volunteers who face hardship, especially due to off-wiki harassment. The community wishes WMF to be more transparent in its actions and to make sure they receive fair scrutiny. Despite the confusion and distrust about many matters and actions, several members are optimistic about WMF's good intention of creating an effective movement-wide code of conduct that can actually help the community by providing the support it deserves and desires.