IP Editing: Privacy Enhancement and Abuse Mitigation/Improving tools

Background

Our goal for this project is two-fold:

- First, to protect our projects from vandalism, harassers, sockpuppets, long-term abuse vandals, disinformation campaigns and other disruptive behaviors.

- Second, to protect our unregistered editors from persecution, harassment and abuse by not publishing their IP addresses.

Based on our conversations on the project talk page and elsewhere, we have heard of the following ways in which IP addresses are being used on our projects:

- IP addresses are helpful in looking for “nearby” editors, that is, editors who are editing from the same or a nearby IP range

- They are used to look up the contribution history of an unregistered editor

- IP addresses are useful in finding cross-wiki contributions

- They are useful to figure out if someone is trying to edit from a VPN or a Tor node

- They are useful to look up the location of an editor, including factoids like their university/company/government agency

- IP addresses are used to see whether an IP address is linked to a known long-term abuser (LTA)

- They are sometimes used to set specific abuse-filters to deter specific kinds of spam

- IP addresses are important for range-blocking

A number of these workflows come into action when we are attempting to see if two user accounts are being used by the same person, sometimes called sockpuppet detection. Using IP addresses to perform sockpuppet detection is a flawed process. IP addresses are getting increasingly dynamic with the increase in the number of people and devices coming online. IPv6 addresses are complicated and ranges are difficult to figure out. To most newcomers, IP addresses appear to be a bunch of seemingly random numbers that don’t make sense, are hard to remember and difficult to make use of. It takes significant time and effort for new users to get accustomed to using IP addresses for blocking and filtering purposes.
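To illustrate why ranges matter more than individual addresses, here is a minimal sketch of a "same range" check using Python's standard `ipaddress` module. The function name `same_range` and the default prefix lengths (/24 for IPv4, /64 for IPv6) are assumptions chosen for illustration; real allocations vary between providers.

```python
import ipaddress


def same_range(a: str, b: str, v4_prefix: int = 24, v6_prefix: int = 64) -> bool:
    """Return True when both addresses fall inside the same network
    of the given prefix length (hypothetical helper, defaults are assumptions)."""
    ip_a = ipaddress.ip_address(a)
    ip_b = ipaddress.ip_address(b)
    if ip_a.version != ip_b.version:
        # An IPv4 and an IPv6 address can never share a range.
        return False
    prefix = v4_prefix if ip_a.version == 4 else v6_prefix
    # strict=False lets us build the network from a host address.
    network = ipaddress.ip_network(f"{ip_a}/{prefix}", strict=False)
    return ip_b in network


# Two IPv6 addresses that look unrelated but share the same /64.
print(same_range("2001:db8:1:2:aaaa::1", "2001:db8:1:2:ffff:ffff:ffff:ffff"))
```

Two IPv6 addresses that differ in most of their visible digits can still belong to the same /64, which is part of what makes them hard to compare by eye.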

Our goal is to reduce our reliance on IP addresses by introducing new tools that use a variety of information sources to find similarities between users. In order to ultimately mask IP addresses without negatively impacting our projects, we have to make visible IP addresses redundant to the process. This is also an opportunity to build more powerful tools that will help identify bad actors.

Proposed ideas for tools to build

We want to make it simpler for users to obtain the information they need from IP addresses in order to do the work they need to. In order to do that, there are three new tools/features we are thinking about.

This feature is currently in progress. To follow along, please visit: [[IP Editing: Privacy Enhancement and Abuse Mitigation/IP Info feature|IP Info feature]].

1. IP info feature

There are some critical pieces of information that IP addresses provide, such as location, organization, possibility of being a Tor/VPN node, rDNS, listed range etc. Currently, if an editor wants to see this information about an IP address, they would use an external tool or search engine to extract that information. We can simplify this process by exposing that information to trusted users on the wiki. In a future where IP addresses are masked, this information will continue to be displayed for masked usernames.
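As a rough sketch of what such a record might hold, the fields listed above could be grouped as follows. The class `IPInfo`, its field names, and the `visible_info` helper are all hypothetical; they do not describe an existing interface, and who counts as a trusted user is still an open question.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass(frozen=True)
class IPInfo:
    """Hypothetical record of what an IP info feature might surface."""
    location: Optional[str] = None
    organization: Optional[str] = None
    is_tor_or_vpn: Optional[bool] = None  # None means "unknown", not "no"
    rdns: Optional[str] = None
    listed_range: Optional[str] = None


def visible_info(info: IPInfo, viewer_is_trusted: bool) -> dict:
    """Return the fields a viewer may see; untrusted viewers see nothing."""
    if not viewer_is_trusted:
        return {}
    # Drop unknown fields so the interface does not present a gap as a fact.
    return {key: value for key, value in asdict(info).items() if value is not None}


info = IPInfo(location="Exampleland", organization="Example University",
              is_tor_or_vpn=False, listed_range="192.0.2.0/24")
print(visible_info(info, viewer_is_trusted=True))
```

Keeping "unknown" distinct from "no" matters here, because the underlying data sources are not always complete or up to date.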

One concern we've been hearing from users we've talked to so far is that it is not always easy to tell whether an IP is coming from a VPN or belongs to a blacklist. Blacklists are fragile: some are not kept up to date, others can be misleading. We are interested to hear in what scenarios it would be helpful for you to know if an IP is from a VPN or belongs to a blacklist, and how you go about looking up that information right now.

Benefits:

- This would eliminate the need for users to copy-paste IP addresses into external tools to extract the information they need.

- We expect this will also considerably cut down the time spent fetching the data.

- In the long run, it would help reduce our dependence on IP addresses, which are hard to understand.

Risks:

- Depending on the implementation, we risk exposing information about IPs to a larger group of people than just the limited set of users who are currently aware of how IP addresses operate.

- Depending on what underlying service we use for getting the details about an IP, it is possible that we may not be able to have translated information, but show information in English.

- There is a risk of users mistakenly concluding that the organization or school was behind the edit, rather than the individual who made the edit.

2. Finding similar editors

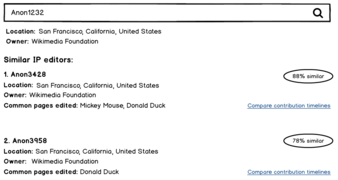

To detect sockpuppets (and unregistered users), editors have to go to great lengths to figure out if two users are the same. This involves comparing the users’ contributions, their location information, editing patterns and much more. The goal for this feature will be to simplify this process and automate some of these comparisons that can be made without manual labor.

This would be done with the help of a machine learning model that can identify accounts demonstrating a similar behavior. The model will be making predictions on incoming edits that will be surfaced to checkusers (and potentially other trusted groups) who will then be able to verify that information and take appropriate measures.
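Before any model is involved, the kind of comparison described above can be sketched with two simple signals: overlap in the pages edited and overlap in the hours of the day when edits were made. This is only an illustration under stated assumptions, not the proposed model. The function names and the input format (a list of `(page_title, hour_of_day)` pairs per user) are hypothetical.

```python
from collections import Counter
from math import sqrt


def jaccard(a: set, b: set) -> float:
    """Share of items common to both sets (0.0 when both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two count vectors."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def similarity(edits_a, edits_b) -> dict:
    """Compare two users' edits, each given as (page_title, hour_of_day) pairs."""
    pages_a = {page for page, _ in edits_a}
    pages_b = {page for page, _ in edits_b}
    hours_a = Counter(hour for _, hour in edits_a)
    hours_b = Counter(hour for _, hour in edits_b)
    # Report each signal separately so a reviewer can see why two users look alike.
    return {
        "page_overlap": jaccard(pages_a, pages_b),
        "hour_overlap": cosine(hours_a, hours_b),
    }


user_a = [("Foo", 14), ("Bar", 14), ("Baz", 15)]
user_b = [("Foo", 14), ("Bar", 15), ("Qux", 3)]
print(similarity(user_a, user_b))
```

Returning the signals separately, rather than one combined score, is a deliberate choice in this sketch: it keeps the human reviewer in a position to judge each signal on its own.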

We could potentially also have a way to compare two or more given unregistered users to find similarities, including seeing if they are editing from nearby IPs or IP ranges. Another opportunity here is to allow the tool to automate some of the blocking mechanisms we use – like automatic range detection and suggesting ranges to block accordingly.
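Automatic range detection could work roughly like this: given a set of addresses, find the smallest single CIDR range that covers all of them, and decline to suggest anything when that range would be too broad. The sketch below uses Python's standard `ipaddress` module. The function name `suggest_range` and the breadth limits (/16 for IPv4, /32 for IPv6) are assumptions for illustration, not agreed policy.

```python
import ipaddress
from typing import Iterable, Optional, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def suggest_range(addresses: Iterable[str],
                  min_prefix_v4: int = 16,
                  min_prefix_v6: int = 32) -> Optional[Network]:
    """Return the smallest CIDR range covering all addresses, or None
    when the input is empty, mixes IP versions, or the range is too broad."""
    ips = [ipaddress.ip_address(a) for a in addresses]
    if not ips or len({ip.version for ip in ips}) != 1:
        return None
    version = ips[0].version
    total_bits = ips[0].max_prefixlen  # 32 for IPv4, 128 for IPv6
    lowest, highest = int(min(ips)), int(max(ips))
    # Bits below the highest differing bit must be treated as host bits.
    host_bits = (lowest ^ highest).bit_length()
    prefix = total_bits - host_bits
    limit = min_prefix_v4 if version == 4 else min_prefix_v6
    if prefix < limit:
        return None  # a block this wide would affect too many uninvolved users
    base = (lowest >> host_bits) << host_bits
    cls = ipaddress.IPv4Network if version == 4 else ipaddress.IPv6Network
    return cls((base, prefix))


print(suggest_range(["192.0.2.10", "192.0.2.77", "192.0.2.200"]))
```

A suggestion like this would still need a human to confirm it, since the smallest covering range can include many unrelated editors.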

A tool like this holds a lot of possibilities, from identifying individual bad actors to uncovering sophisticated sockpuppeting rings. But there is also a risk of exposing legitimate sock accounts who want to keep their identity secret for various reasons. This makes this project a tricky one. We want to hear from you about who should be using this tool and how we can mitigate the risks.

With the help of the community, such a feature can evolve to compare features that editors currently use when comparing editors. One possibility is also to train a machine learning model to do this (similar to how ORES detects problematic edits).

Here’s one possibility for how such a feature might look in practice:

- Finding similar editors with IPs

- Finding similar editors with masked IPs

Benefits:

- Such a tool would greatly reduce the time and effort our functionaries spend finding bad-faith actors on our projects.

- This tool could also be used to find common ranges between known problem editors to make blocking IP ranges easier.

Risks:

- If we use machine learning to detect sockpuppets, it should be very carefully monitored and checked for biases in the training data. Users should be cautioned against over-reliance on the similarity-index score. It is imperative that human review be part of the process.

- Easier access to information such as location can sometimes make it easier, not more difficult, to find identifiable information about someone.

3. A database for documenting long-term abusers

Long-term abuse vandals are manually documented on the wikis, if they're documented at all. This includes writing up a profile of their editing behaviors, articles they edit, indicators for how to recognize their sock accounts, listing out all the IP addresses used by them and more. With numerous pages spanning the IP addresses used by these vandals, it is increasingly a mammoth task to search through and find relevant information when needed, if it is available. A better way to do this could be to build a database that documents the long-term abusers.

Such a system would facilitate easy cross-wiki search for documented vandals matching search criteria. Eventually, this could potentially be used to automatically flag users when their IPs or editing behaviors are found to match those of known long-term abusers. After the user has been flagged, an admin could take necessary action if that seems appropriate. There is an open question about whether this should be public or private or something in-between. It is possible to have permissions for different levels of use for read and write access to the database. We want to hear from you about what you think would work best and why.
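To make the idea of matching against documented cases more concrete, here is a minimal sketch of a lookup by IP address. The `LTARecord` structure, its fields, and the `matching_cases` function are hypothetical, and access control is deliberately left out because it is still an open question.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class LTARecord:
    """Hypothetical entry for one documented long-term abuser."""
    case_name: str
    wikis: Set[str] = field(default_factory=set)     # wikis where the case is documented
    ranges: List[str] = field(default_factory=list)  # known ranges in CIDR notation
    notes: str = ""                                  # free-text behavior profile


def matching_cases(ip: str, records: List[LTARecord],
                   wiki: Optional[str] = None) -> List[str]:
    """Return the names of cases whose known ranges contain the address.
    With wiki=None the search is cross-wiki."""
    address = ipaddress.ip_address(ip)
    hits = []
    for record in records:
        if wiki is not None and wiki not in record.wikis:
            continue
        for cidr in record.ranges:
            network = ipaddress.ip_network(cidr, strict=False)
            if address.version == network.version and address in network:
                hits.append(record.case_name)
                break  # one matching range per case is enough
    return hits


records = [
    LTARecord("Example case A", {"enwiki", "dewiki"}, ["192.0.2.0/24"]),
    LTARecord("Example case B", {"frwiki"}, ["2001:db8::/32", "198.51.100.0/25"]),
]
print(matching_cases("192.0.2.55", records))
```

A match here would only be a flag for an admin to review, in line with the flow described above, not an automatic action.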

Cost:

- Such a database would need community members to participate in populating it with the currently known long-term abusers. This can be a significant amount of work for some wikis.

Benefits:

- Cross-wiki search for documented long-term abusers would be an enormous benefit over the current system, reducing a lot of work for patrollers.

- Automated flagging of potentially problematic actors based on known editing patterns and IPs would come in handy in a lot of workflows. It would allow admins to make judgements and take actions based on the suggested flags.

Risks:

- As we build such a system, we would have to think hard about who has access to the database data and how we can keep it secured.

These ideas are at a very early stage. We want your help with brainstorming on these ideas. What are some costs, benefits and risks we might be overlooking? How can we improve upon these ideas? We’d love to hear from you on the talk page.

Existing tools used by editors

On-wiki tools

- CheckUser: CheckUser allows a user with a checkuser flag to access confidential data stored about a user, IP address, or CIDR range. This data includes IP addresses used by a user, all users who edited from an IP address or range, all edits from an IP address or range, user agent strings, and X-Forwarded-For headers. It is most commonly used for detecting sockpuppets.

- Allow checkusers to see which users have more than 50 accounts registered to the same email address. The existence of such users was confirmed in phab:T230436 (although the task itself is unrelated). While this does not affect IP privacy directly, it could slightly offset the effect of abuse management becoming harder.

Project-specific tools (including bots and scripts)

Please specify what project the tool is used on and what it does, and include a link if possible.

External tools

ToolForge tools

- Intersect contribs

- WHOIS and reverse DNS

- Editor interaction analyser – Analyse interactions between two or three users – activity on same pages, during the same time etc.

- IPCheck: Allows you to look up information about an IP address, including whether it is a proxy, Tor node or potential VPN.

- GUC – Global user contributions for any user.

- Reverse DNS for a range

Third-party tools

- Major IP address blocks: http://www.nirsoft.net/countryip/cz.html

- User agent string lookup: http://www.useragentstring.com/

- Nmap

- Spamhaus lists and XBL (Exploits Block List)

- Talos – IP reputation (mainly for email spam)